Gotta go fast

Advent of code is now a pretty popular yearly event, where every day from December 1st to 25th, there is a new two-parts problem to solve. The story is delightfully weird and convoluted, and the problems are all pretty small and self contained. I found that it's a great way to level up in a programming language. Once you are familiar with the basic syntax and concept, these problems are really good practice to become intermediate, without being tangled in complexity or library hell.

The official leaderboard is about solving the problems as fast as possible, which doesn't really interest me, compounded by the fact that they are revealed at 5am in my timezone. So I remembered this post of someone solving last year's advent of code under 1s total. Of course it's highly dependent on the underlying hardware, and a completely arbitrary goal, but hey, everyone needs goals.

The results

For a total cumulative time of about 650ms.

This is what I get when I ran the code on my laptop with an i5-4210U CPU @ 1.70GHz. I started on my desktop with an older but faster cpu (i7-3770 @ 3.40GHz), but had to finish on my laptop because of covid and travel complications. And the difference in frequency is very noticeable.

This year I went with rust, because it's a really good fit when the goal is raw performance. I'm also really enjoying this language as I think it has a ton of good stuff going for it, but that's a tengeant.

Take aways

In decreasing order of importance, here are the things I found worked (or not).

Benchmark !

Having a quick way to benchmark the various problems is the first step. Many times I tried "optimisations" which made the code slower and was surprised by that. Rust comes with the excellent criterion crate to easily run benchmark while taking care of some pitfalls inherent to benchmarkings.

Flamegraphs: meh

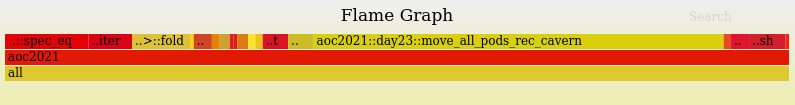

Also I thought it would be a breeze to optimize the problems using cargo flamegraph. However, I often ended up with things looking like

Because the compiler aggressively inline and transform the code, for small problems like that, the flamegraphs aren't super useful in general.

One exception however is the mention of allocation and free. When these show up significantly in the flamegraph, I know I'm allocating too many intermediate objects. This led to some improvements.

Parsing !

As usual with advent of code, quite a few problems require parsing. Although it's probably possible to do everything with regexs, I used this as an opportunity to master nom a parser combinator library. I remember haskell's monads really clicking for me when using megaparsec for the first edition of advent of code, so this was a nice throwback. The lack of do notation in rust makes using nom a bit more awkward but once you get the hang of it, it's really not that bad.

Do less work

By far the best way to be fast is to do less work. And that means being somewhat clever in solving the problems.

For example, in day 19, a basic bruteforce solution took me more than 10s. But using distances to narrow down the possible solutions, I decreased the run time to about 200ms. And then, further using insight about distance to narrow down further and doing a lot less work, it now runs in less than 10ms.

Another recurring theme is about memoization. Some problems like day 21 and day 23 are similar to graph (or tree, but that's a graph) traversals. Using dynamic programming or memoizing the states drastically cuts down the search space. For day 21, I went from 1.6s to 24ms 🤯.

day21 time: [24.124 ms 24.140 ms 24.164 ms]

change: [-98.517% -98.516% -98.515%] (p = 0.00 < 0.05)

Performance has improved.

Arrays > HashMap

In a few cases, like for day 5 the problem is about a grid which changes from iteration to iteration. Often the grid is sparse, with many slots empty. One way to represent the grid is with a hash map (or btree map), and another is with a vector. For 2D grids, indexing and managing out of bounds indices is more natural with a map, and requires some more computation for vector. However, a vector is tremendously faster to access elements. For these problems, no input generated too huge arrays, so the memory was never really a concern.

Here is what I got when I switched from a map to a vec for day 5:

day05 time: [2.4725 ms 2.4765 ms 2.4813 ms]

change: [-95.544% -95.536% -95.527%] (p = 0.00 < 0.05)

Performance has improved.

a 95% improvement !

This manifested a couple of times during this year. The short story here is that if you can afford the memory to store everything in a contiguous chunk of memory, do it.

Tweaking hash functions

The default HashMap and BTreeMap in the rust standard library are pretty good. However, the hash function can usually be made faster and there are crates like fnv and ahash which provide drop-in replacement of the standard hash map. I got a few percent improvements using these.

I haven't dug too deep into the tradeofs for the various hash functions there, but they definitely exists. Do measure and choose what works best for your usecase. This is the least significant improvement I found though, only shaving a few milliseconds here and there.

Things that did NOT work

For day 15, a simple dijkstra works well. I wanted to see if I could visit less node with an A star but this would either return the wrong result, or visit roughly the same number of node and be slower overall.

Conclusion

Striving to get an efficient solution each day was actually quite rewarding. Especially seeing the numbers go down when running new benchmarks. Primitive brains gets its dopamine any way it can.

The entire exercise is definitely quite time consuming though. I probably spent around 40h total. I'm not that great for these problems, and can get mired in unproductive rabbit holes.

But the end result is quite nice, and I definitely leveled up in a few areas like parsing or benchmarking.

Thanks to Nazral and mclovin for their proofreading.